A recent job opening at Pindrop Security, a voice authentication startup, attracted an unusual applicant among a flood of candidates.

The applicant, identified only as Ivan, was a Russian coder who appeared to have the right skills for a senior engineering position. During a video interview last month, however, Pindrop's recruiter noticed discrepancies in Ivan's facial expressions, which raised suspicions about whether he was who he claimed to be.

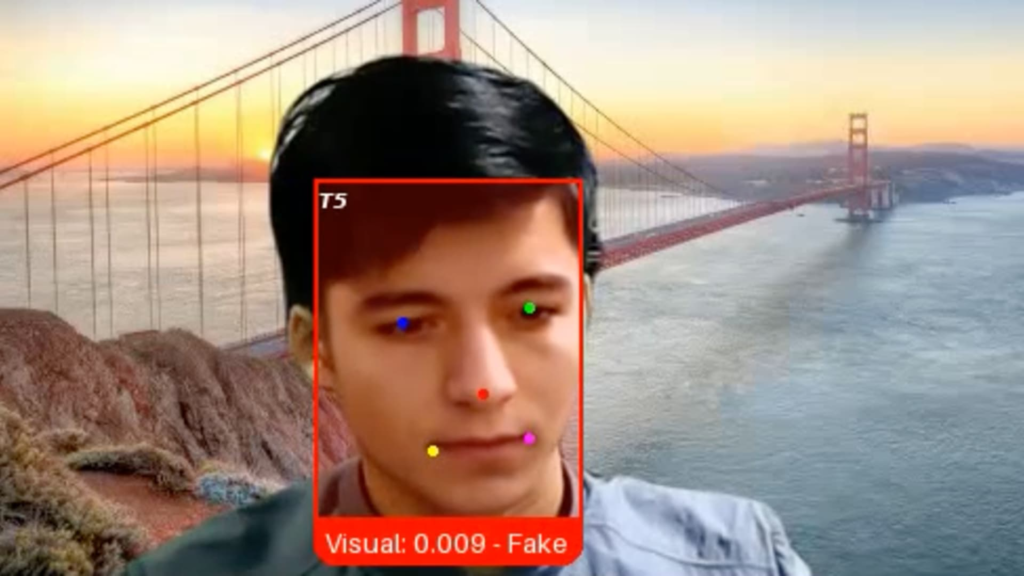

Pindrop later dubbed the candidate "Ivan X." According to Pindrop CEO and co-founder Vijay Balasubramaniyan, he was allegedly using deepfake technology and other generative AI tools to disguise his identity.

“Generative AI has blurred the lines between human and machine,” Balasubramaniyan remarked. “We observe individuals leveraging fabricated identities, faces, and voices to obtain employment, occasionally even orchestrating a face swap with another person who appears for the job.”

Companies have long defended themselves against hackers and other external threats. Now they face a new challenge: job applicants who use AI tools to fake their identities, complete with false photo IDs and fabricated employment histories, in order to deceive potential employers.

Research and advisory firm Gartner projects that, as AI-generated profiles proliferate, one in four job candidates worldwide could be fake by 2028.

The risks of hiring a fake candidate can be significant, depending on the impostor's motives. Once hired, an impostor could install malware to extort the company or siphon off customer data, trade secrets, or funds. In many cases, though, the deceitful employees are simply collecting wages they could not otherwise earn, Balasubramaniyan noted.

‘Massive’ increase

Experts in cybersecurity and cryptocurrency have observed a sharp rise in fraudulent job applicants. Because these industries frequently hire for remote roles, they are especially attractive to bad actors, the experts said.

Ben Sesser, CEO of BrightHire, said he first became aware of the problem a year ago, but the number of fraudulent applications has "ramped up massively" this year. BrightHire helps more than 300 clients in the finance, tech, and healthcare sectors evaluate candidates through video interviews.

“Humans are typically the weak link in the cybersecurity chain, and the hiring process involves numerous hand-offs and interactions,” Sesser explained. “This process tends to become a vulnerable point for exploitation.”

The problem extends beyond the tech industry. According to the Justice Department, more than 300 U.S. companies have unwittingly hired impostors with ties to North Korea for IT roles, including a well-known national television network, a defense contractor, and several Fortune 500 companies.

The impostors used stolen American identities to apply for remote positions and employed various techniques to hide their true locations. The Justice Department alleges that they sent millions of dollars in wages back to North Korea to help fund the country's weapons program.

That case, which involved a group of alleged operatives including an American citizen, exposed what authorities believe is a vast international network of thousands of IT workers affiliated with North Korea. The DOJ has since brought additional cases against North Korean IT workers.

A growth industry

Lili Infante, CEO of CAT Labs, has seen the problem firsthand. Because her Florida-based startup sits at the intersection of cybersecurity and cryptocurrency, it is a particularly attractive target for malicious actors.

“Each time we post a job listing, we receive applications from about 100 North Korean spies,” Infante revealed. “Their resumes often appear impressive, filled with relevant industry keywords.”

Infante's company relies on an identity verification firm to weed out fraudulent candidates. Such firms form an emerging sector that includes companies like iDenfy, Jumio, and Socure.

The fake-applicant problem now extends beyond North Koreans to criminal organizations in Russia, China, Malaysia, and South Korea, according to Roger Grimes, a veteran computer security consultant.

Ironically, some of these impostors could outperform many legitimate employees, Grimes noted.

“At times, they may perform poorly, but there are instances where they excel so impressively that I’ve had people express regret over having to let them go,” Grimes stated.

His employer, cybersecurity firm KnowBe4, recently revealed that it accidentally hired a North Korean software engineer.

The worker used a manipulated stock photo and a valid but stolen U.S. identity to get through background checks and four video interviews. He was flagged only later, after the company detected suspicious activity on his account.

Fighting deepfakes

Despite the high-profile incidents publicized by the DOJ, many hiring managers remain largely unaware of the threat posed by fake job candidates, according to BrightHire's Sesser.

“Their responsibilities typically encompass talent strategy and other crucial tasks, but they haven’t historically been front-line security personnel,” he explained. “There’s a tendency to believe that they aren’t encountering these issues, but it’s more likely they are simply not aware of what’s happening.”

As deepfake technology continues to advance, avoiding deception will become increasingly challenging, Sesser warned.

In the case of "Ivan X," Balasubramaniyan said Pindrop used a newly developed video authentication program to confirm that he was a fraudulent applicant.

Although Ivan claimed to be based in western Ukraine, his IP address indicated that he was thousands of miles to the east, possibly at a Russian military facility near the North Korean border, the company said.

Pindrop, which is backed by Andreessen Horowitz and Citi Ventures, was founded more than a decade ago to detect fraud in voice interactions, and its clients include some of the largest U.S. banks, insurers, and healthcare firms. The company may soon expand into video authentication.

“We can no longer rely on our eyes and ears,” Balasubramaniyan said. “Without technology, you’re no better off than a monkey tossing a coin at random.”